Last Updated: 2023-10-17

⚠️ In this article, ~/WORKING_DIR/ refers to any directory that will contain the cloned repo.

0. Install Isaac Gym and test venv

If you do not have Isaac Gym installed, please see the Dev/Isaac page.

1. Clone this repo, create venv, and install dependencies

1.1 Clone the repo

cd ~/WORKING_DIR/

git clone https://github.com/UWRobotLearning/ground_control_base

1.2 Create virtual env and install dependencies

⚠️ Note: The conda environment must use Python 3.8 to be compatible with isaacgym.

conda create -n a1 python=3.8

conda activate a1

conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia

cd ground_control_base

pip install -r requirements.txt

pip install -e .
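Before moving on, it can help to confirm that the active environment matches the constraints above. The following is a minimal sketch and not part of the repo: the helper names `python_is_supported` and `missing_packages` are hypothetical, and it assumes the packages are importable under the top-level names `torch` and `isaacgym`.

```python
import importlib.util
import sys


def python_is_supported(version_info=sys.version_info):
    """Return True when the interpreter is Python 3.8, as the note above requires."""
    return tuple(version_info[:2]) == (3, 8)


def missing_packages(names):
    """Return the top-level module names that cannot be located in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python 3.8:", python_is_supported())
    # Assumed import names; adjust them if your install differs.
    print("Missing packages:", missing_packages(["torch", "isaacgym"]))
```

Run it inside the activated `a1` environment; an empty "Missing packages" list suggests the imports should resolve.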

⚠️️ TODO: Briefly justify why dependencies exist

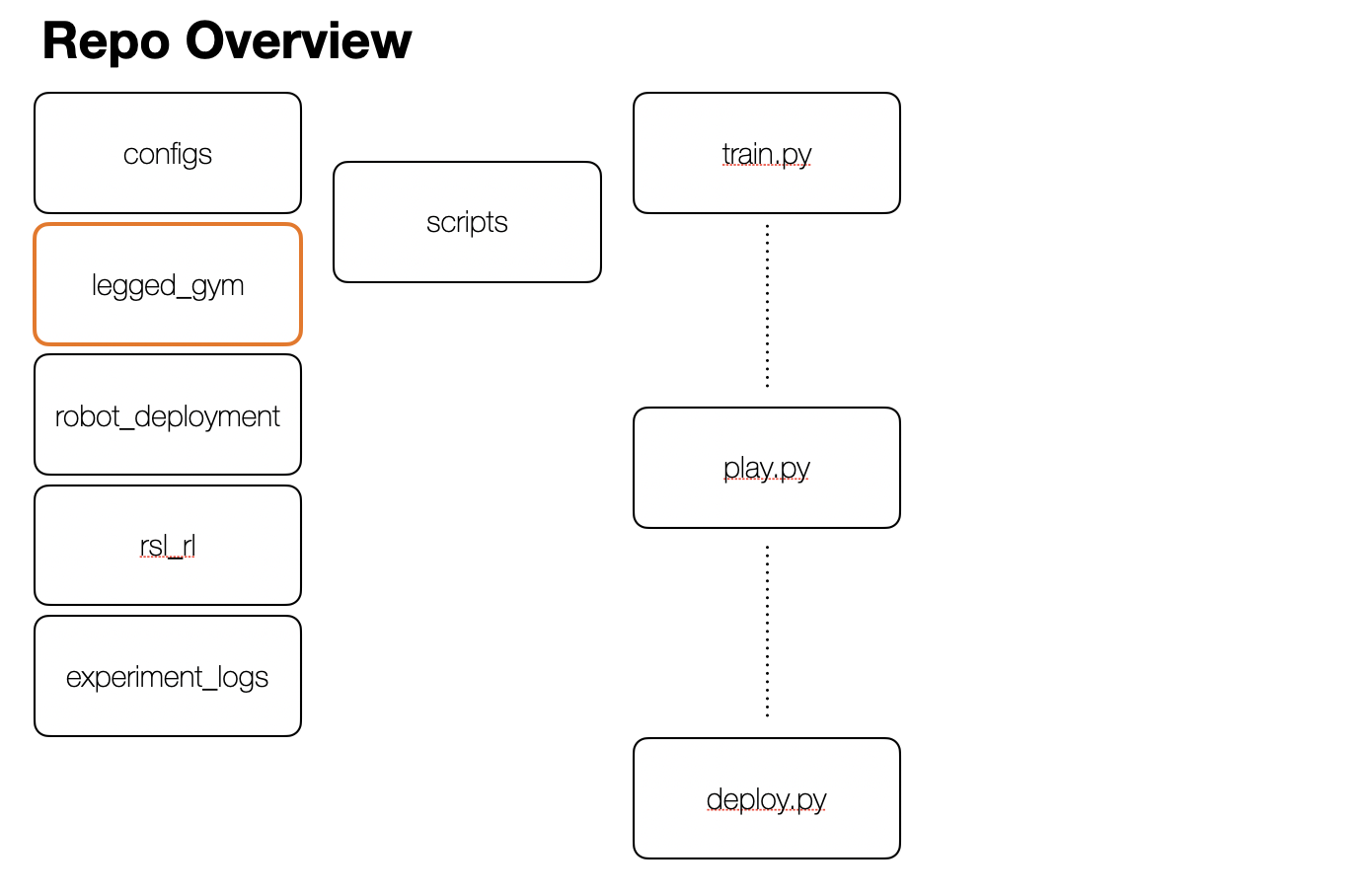

2. Run train.py script

This is the entry point to the base training loop, with sane defaults. It uses Hydra for:

- More powerful config interpolation and composition

- Parallelized experiment dispatch via Joblib, SLURM, Ray, or Submitit

- Plug-ins for hyperparameter search such as Optuna, Ax, Nevergrad…

Note that this code can also be run without Hydra. Clean code keeps a clear barrier between “config logic” and the envs / learning machinery. This decoupling lets your contributions stay evergreen rather than ad hoc.

See Config Management for a more detailed discussion of this concept.

2.0 Examine train_nohydra.py

This code is an example of how to construct the Env / Runner classes and still configure them via the hierarchical dataclass TrainScriptConfig. Note that there is no Hydra dependency anywhere.

vim train_nohydra.py
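The pattern described above can be illustrated with a small, self-contained sketch. Only the name `TrainScriptConfig` comes from this page; the nested configs, their fields and default values, and the `Env` / `Runner` stand-ins are hypothetical placeholders rather than the repo's actual classes.

```python
from dataclasses import dataclass, field, replace


@dataclass
class EnvConfig:
    num_envs: int = 4096            # hypothetical field
    episode_length_s: float = 20.0  # hypothetical field


@dataclass
class RunnerConfig:
    max_iterations: int = 5000      # hypothetical field
    save_interval: int = 1000       # hypothetical field


@dataclass
class TrainScriptConfig:
    # Hierarchical config: each component owns its own sub-config.
    env: EnvConfig = field(default_factory=EnvConfig)
    runner: RunnerConfig = field(default_factory=RunnerConfig)


class Env:
    """Stand-in environment that only sees its own slice of the config."""

    def __init__(self, cfg: EnvConfig):
        self.cfg = cfg


class Runner:
    """Stand-in runner; it knows nothing about how the config was produced."""

    def __init__(self, env: Env, cfg: RunnerConfig):
        self.env = env
        self.cfg = cfg

    def checkpoint_iterations(self):
        # Iterations at which a checkpoint would be written.
        return list(range(0, self.cfg.max_iterations + 1, self.cfg.save_interval))


def build(cfg: TrainScriptConfig) -> Runner:
    # The only place where config is wired to machinery.
    return Runner(Env(cfg.env), cfg.runner)


if __name__ == "__main__":
    cfg = TrainScriptConfig()
    # Override one nested value without touching the Env / Runner code.
    cfg = replace(cfg, runner=replace(cfg.runner, max_iterations=2000))
    print(build(cfg).checkpoint_iterations())
```

Because `Env` and `Runner` accept plain dataclasses, the same classes could be driven by Hydra, a test, or a hand-written script without modification.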

2.1 Run train.py

cd ~/WORKING_DIR

python ground_control/leggedgym/scripts/train.py

This produces outputs in experiment_logs, laid out as follows:

user@computer:~/WORKING_DIR/experiment_logs$ tree
.
├── 2023-10-13_14-35-39
│   ├── events.out.tfevents.1697232945.u114025.45506.0
│   ├── exported_policy.pt
│   ├── model_0.pt
│   ├── ⋮
│   ├── model_1000.pt
│   ├── ⋮
│   ├── model_5000.pt
│   ├── resolved_config.pkl
│   ├── resolved_config.yaml
│   └── train.log
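When working with a run folder like the one listed above, note that a plain alphabetical sort would place `model_1000.pt` before `model_500.pt`. The sketch below lists checkpoints in numeric order. It assumes the `model_<N>.pt` naming seen in the listing; the function name `list_checkpoints` is hypothetical and not part of the repo.

```python
import re
from pathlib import Path

# Matches checkpoint files such as model_0.pt or model_5000.pt.
_CHECKPOINT_RE = re.compile(r"^model_(\d+)\.pt$")


def list_checkpoints(run_dir):
    """Return (iteration, path) pairs for model_<N>.pt files, sorted by N."""
    found = []
    for path in Path(run_dir).iterdir():
        match = _CHECKPOINT_RE.match(path.name)
        if match:
            found.append((int(match.group(1)), path))
    return sorted(found, key=lambda pair: pair[0])
```

The last element of the returned list is the checkpoint with the highest iteration number; files such as `exported_policy.pt` are ignored.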

3. Run play.py script

This is the entry point for replaying training runs. By default, it replays the most recent model in the most recent (chronologically) run folder in experiment_logs. The script reproduces the exact conditions of the specified experiment by deserializing the resolved_config and loading it into the MISSING fields of the configs defined at the top of the script. Note that play.py is only meant to replay in Isaac. To deploy to real hardware or run sim-to-sim (e.g. PyBullet or MuJoCo), use deploy.py.

3.1 Run play.py

cd ~/WORKING_DIR

python ground_control/leggedgym/scripts/play.py
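To make the "most recent folder" default concrete, here is one way such a lookup could be written. This is an illustrative sketch only: play.py's actual selection logic may differ, the function name `find_latest_run` is hypothetical, and the timestamp format is inferred from the folder name `2023-10-13_14-35-39` shown earlier on this page.

```python
from datetime import datetime
from pathlib import Path

# Assumed folder-name format, e.g. 2023-10-13_14-35-39.
RUN_NAME_FORMAT = "%Y-%m-%d_%H-%M-%S"


def find_latest_run(log_root):
    """Return the run directory with the newest timestamp name, or None if there is none."""
    runs = []
    for path in Path(log_root).iterdir():
        if not path.is_dir():
            continue
        try:
            stamp = datetime.strptime(path.name, RUN_NAME_FORMAT)
        except ValueError:
            continue  # skip folders that are not timestamp-named
        runs.append((stamp, path))
    if not runs:
        return None
    return max(runs, key=lambda pair: pair[0])[1]
```

Parsing the folder name, rather than relying on file modification times, keeps the result stable if a run folder is copied or touched later.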

4. Inspect experiment_logs outputs

At this point, you might want to look at performance on some metrics, review saved video artifacts, inspect logs, or look at learning curves. We briefly cover which artifacts are produced in experiment_logs and recommend creating a new repo to capture them.
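As a starting point for inspection, the sketch below counts the files in a single run folder by kind. The categories are inferred from the file names in the example listing on this page (TensorBoard event files, `model_<N>.pt` checkpoints, `exported_policy.pt`, `resolved_config.*`, and `*.log`); the function name `artifact_inventory` and the category labels are hypothetical.

```python
from collections import Counter
from pathlib import Path


def artifact_inventory(run_dir):
    """Count files in a run directory, grouped by a coarse artifact kind."""
    kinds = Counter()
    for path in Path(run_dir).iterdir():
        if not path.is_file():
            continue
        name = path.name
        if name.startswith("events.out.tfevents"):
            kinds["tensorboard_events"] += 1
        elif name.startswith("model_") and name.endswith(".pt"):
            kinds["checkpoints"] += 1
        elif name == "exported_policy.pt":
            kinds["exported_policy"] += 1
        elif name.startswith("resolved_config."):
            kinds["resolved_config"] += 1
        elif name.endswith(".log"):
            kinds["logs"] += 1
        else:
            kinds["other"] += 1
    return dict(kinds)
```

A run that is missing its resolved config or exported policy shows up immediately as an absent key in the returned dictionary.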